Expo

MRI | Ultrasound | Nuclear Medicine | General/Advanced Imaging | Imaging IT | Industry News

Events

- AI-Powered Mammography Screening Boosts Cancer Detection in Single-Reader Settings

- Photon Counting Detectors Promise Fast Color X-Ray Images

- AI Can Flag Mammograms for Supplemental MRI

- 3D CT Imaging from Single X-Ray Projection Reduces Radiation Exposure

- AI Method Accurately Predicts Breast Cancer Risk by Analyzing Multiple Mammograms

- Biparametric MRI Combined with AI Enhances Detection of Clinically Significant Prostate Cancer

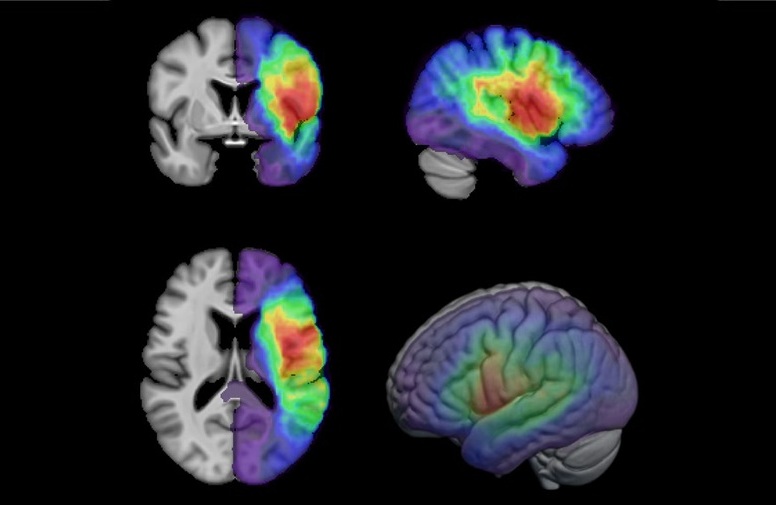

- First-Of-Its-Kind AI-Driven Brain Imaging Platform to Better Guide Stroke Treatment Options

- New Model Improves Comparison of MRIs Taken at Different Institutions

- Groundbreaking New Scanner Sees 'Previously Undetectable' Cancer Spread

- First-Of-Its-Kind Tool Analyzes MRI Scans to Measure Brain Aging

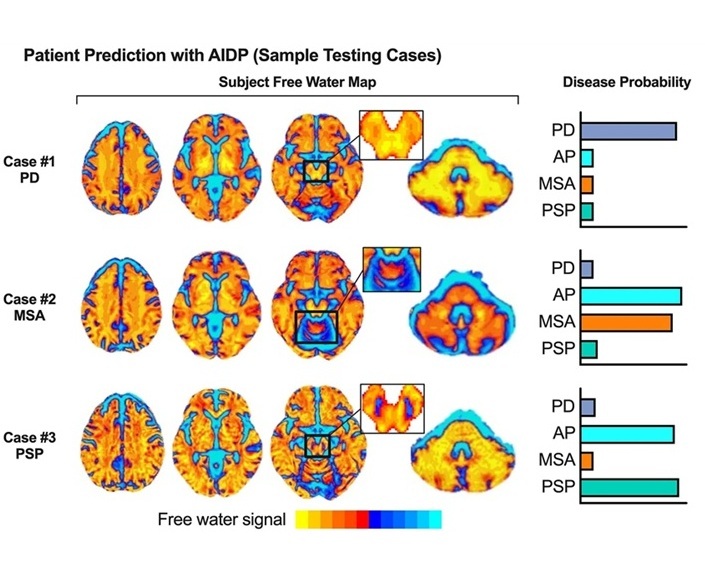

- Innovative PET Imaging Technique to Help Diagnose Neurodegeneration

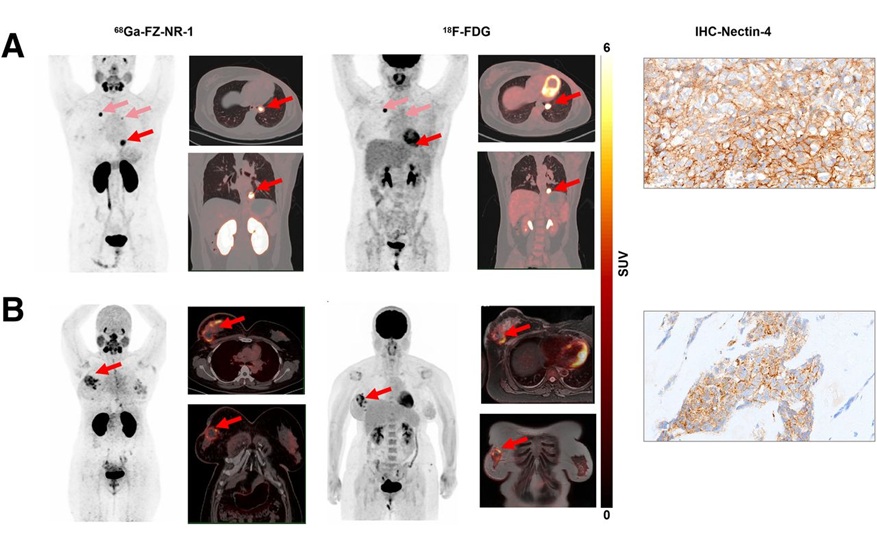

- New Molecular Imaging Test to Improve Lung Cancer Diagnosis

- Novel PET Technique Visualizes Spinal Cord Injuries to Predict Recovery

- Next-Gen Tau Radiotracers Outperform FDA-Approved Imaging Agents in Detecting Alzheimer’s

- Breakthrough Method Detects Inflammation in Body Using PET Imaging

- Artificial Intelligence Detects Undiagnosed Liver Disease from Echocardiograms

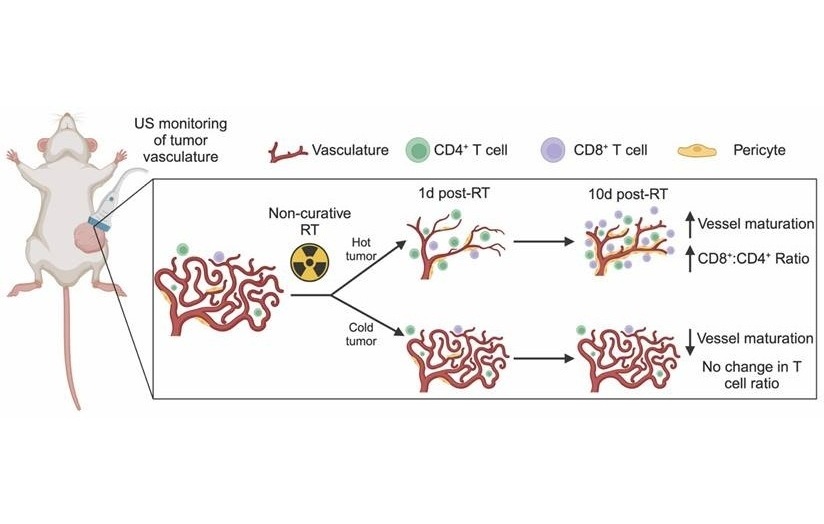

- Ultrasound Imaging Non-Invasively Tracks Tumor Response to Radiation and Immunotherapy

- AI Improves Detection of Congenital Heart Defects on Routine Prenatal Ultrasounds

- AI Diagnoses Lung Diseases from Ultrasound Videos with 96.57% Accuracy

- New Contrast Agent for Ultrasound Imaging Ensures Affordable and Safer Medical Diagnostics

- AI Reduces CT Lung Cancer Screening Workload by Almost 80%

- Cutting-Edge Technology Combines Light and Sound for Real-Time Stroke Monitoring

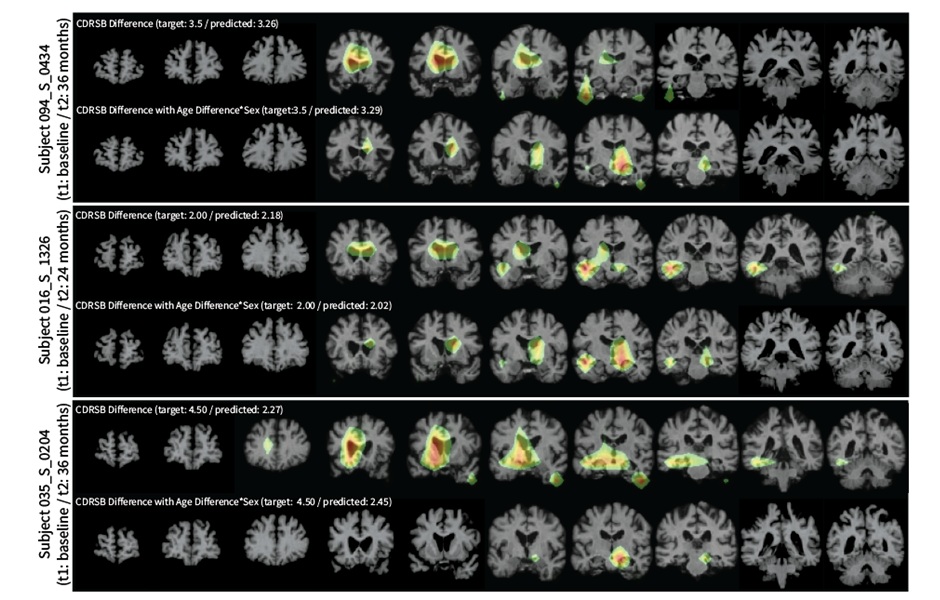

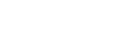

- AI System Detects Subtle Changes in Series of Medical Images Over Time

- New CT Scan Technique to Improve Prognosis and Treatments for Head and Neck Cancers

- World’s First Mobile Whole-Body CT Scanner to Provide Diagnostics at POC

- Global AI in Medical Diagnostics Market to Be Driven by Demand for Image Recognition in Radiology

- AI-Based Mammography Triage Software Helps Dramatically Improve Interpretation Process

- Artificial Intelligence (AI) Program Accurately Predicts Lung Cancer Risk from CT Images

- Image Management Platform Streamlines Treatment Plans

- AI Technology for Detecting Breast Cancer Receives CE Mark Approval

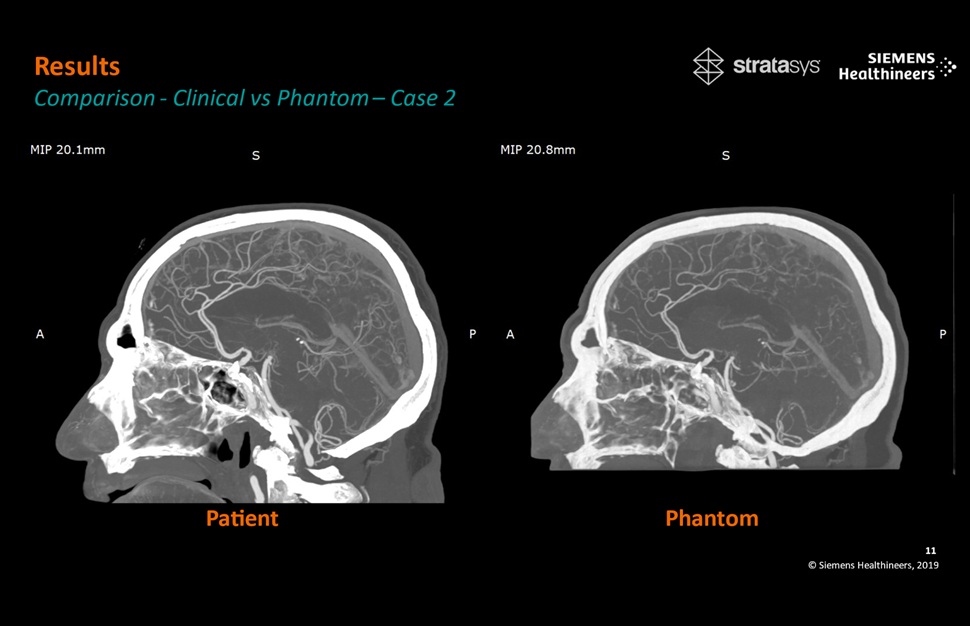

- Patient-Specific 3D-Printed Phantoms Transform CT Imaging

- Siemens and Sectra Collaborate on Enhancing Radiology Workflows

- Bracco Diagnostics and ColoWatch Partner to Expand Availability of CRC Screening Tests Using Virtual Colonoscopy

- Mindray Partners with TeleRay to Streamline Ultrasound Delivery

- Philips and Medtronic Partner on Stroke Care

Expo

view channel

view channel

view channel

view channel

view channel

view channel

view channel

MRIUltrasoundNuclear MedicineGeneral/Advanced ImagingImaging ITIndustry News

Events

Advertise with Us

view channel

view channel

view channel

view channel

view channel

view channel

view channel

MRIUltrasoundNuclear MedicineGeneral/Advanced ImagingImaging ITIndustry News

Events

Advertise with Us

- AI-Powered Mammography Screening Boosts Cancer Detection in Single-Reader Settings

- Photon Counting Detectors Promise Fast Color X-Ray Images

- AI Can Flag Mammograms for Supplemental MRI

- 3D CT Imaging from Single X-Ray Projection Reduces Radiation Exposure

- AI Method Accurately Predicts Breast Cancer Risk by Analyzing Multiple Mammograms

- Biparametric MRI Combined with AI Enhances Detection of Clinically Significant Prostate Cancer

- First-Of-Its-Kind AI-Driven Brain Imaging Platform to Better Guide Stroke Treatment Options

- New Model Improves Comparison of MRIs Taken at Different Institutions

- Groundbreaking New Scanner Sees 'Previously Undetectable' Cancer Spread

- First-Of-Its-Kind Tool Analyzes MRI Scans to Measure Brain Aging

- Innovative PET Imaging Technique to Help Diagnose Neurodegeneration

- New Molecular Imaging Test to Improve Lung Cancer Diagnosis

- Novel PET Technique Visualizes Spinal Cord Injuries to Predict Recovery

- Next-Gen Tau Radiotracers Outperform FDA-Approved Imaging Agents in Detecting Alzheimer’s

- Breakthrough Method Detects Inflammation in Body Using PET Imaging

- Artificial Intelligence Detects Undiagnosed Liver Disease from Echocardiograms

- Ultrasound Imaging Non-Invasively Tracks Tumor Response to Radiation and Immunotherapy

- AI Improves Detection of Congenital Heart Defects on Routine Prenatal Ultrasounds

- AI Diagnoses Lung Diseases from Ultrasound Videos with 96.57% Accuracy

- New Contrast Agent for Ultrasound Imaging Ensures Affordable and Safer Medical Diagnostics

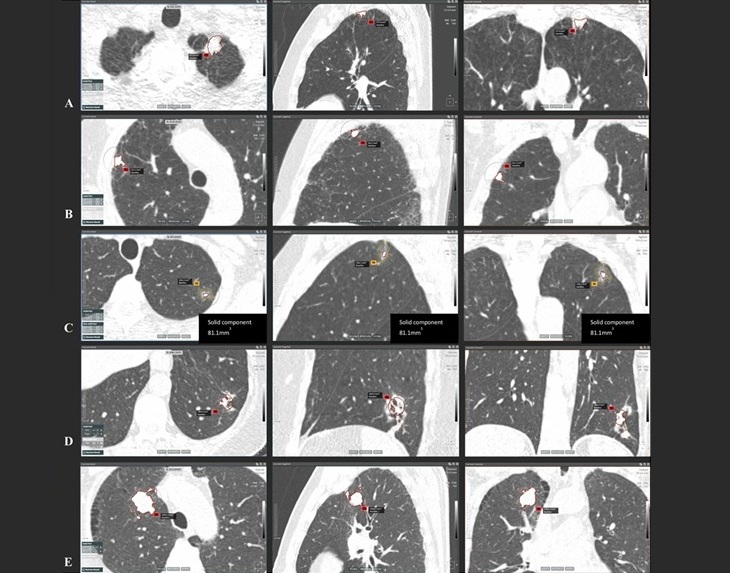

- AI Reduces CT Lung Cancer Screening Workload by Almost 80%

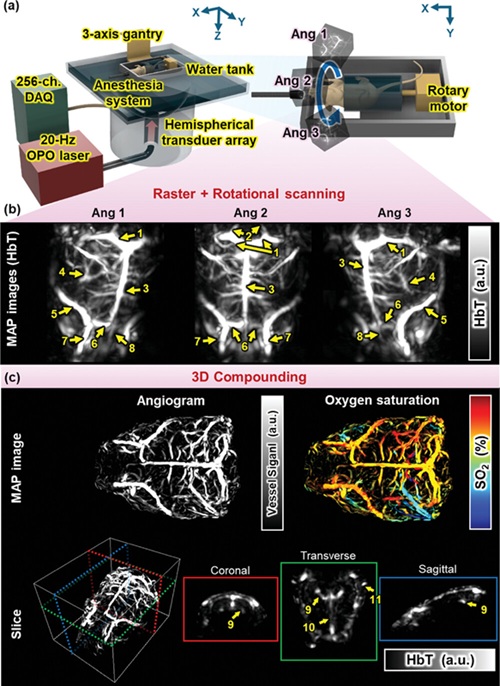

- Cutting-Edge Technology Combines Light and Sound for Real-Time Stroke Monitoring

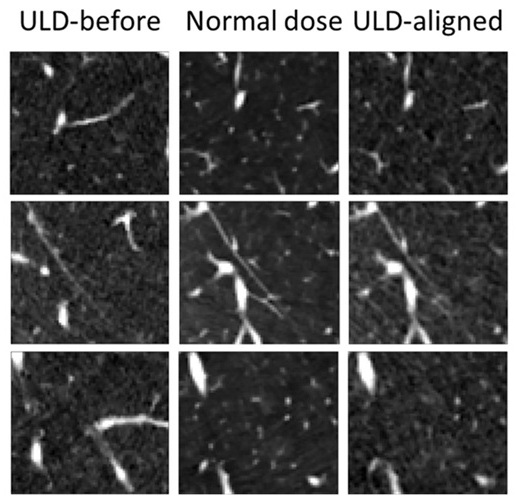

- AI System Detects Subtle Changes in Series of Medical Images Over Time

- New CT Scan Technique to Improve Prognosis and Treatments for Head and Neck Cancers

- World’s First Mobile Whole-Body CT Scanner to Provide Diagnostics at POC

- Global AI in Medical Diagnostics Market to Be Driven by Demand for Image Recognition in Radiology

- AI-Based Mammography Triage Software Helps Dramatically Improve Interpretation Process

- Artificial Intelligence (AI) Program Accurately Predicts Lung Cancer Risk from CT Images

- Image Management Platform Streamlines Treatment Plans

- AI Technology for Detecting Breast Cancer Receives CE Mark Approval

- Patient-Specific 3D-Printed Phantoms Transform CT Imaging

- Siemens and Sectra Collaborate on Enhancing Radiology Workflows

- Bracco Diagnostics and ColoWatch Partner to Expand Availability CRC Screening Tests Using Virtual Colonoscopy

- Mindray Partners with TeleRay to Streamline Ultrasound Delivery

- Philips and Medtronic Partner on Stroke Care

![Image: [18F]3F4AP in a human subject after mild incomplete spinal cord injury (Photo courtesy of The Journal of Nuclear Medicine, DOI:10.2967/jnumed.124.268242)](https://globetechcdn.com/medicalimaging/images/stories/articles/article_images/2025-02-24/Brugarolas_F8.large.jpg)